Yo! I’m really stoked & feeling very thankful I was able to make this happen and spring for one of these. I’m hoping this is alright usage of the forum, but you can let me know if I’m off. I’m not even sure if anyone will actually read this, but ah well. I’m buzzing about this thing so I’ll do it regardless! This is a “first impression”.

I have the base spec of the new AMD AI 13 inch:

OS: Arch Linux x86_64

Host: Laptop 13 (AMD Ryzen AI 300 Series) A5

Kernel: 6.16.7-arch1-1

Uptime: 21 hours, 44 mins

Packages: 1584 (pacman), 86 (flatpak)

Shell: zsh 5.9

Resolution: 2880x1920

DE: Plasma 6.4.5

WM: kwin

Theme: Sweet-Dark-v40 [GTK2/3]

Icons: Papirus-Dark [GTK2/3]

Terminal: WezTerm

CPU: AMD Ryzen AI 5 340 w/ Radeon 840M (12) @ 4.900GHz

GPU: AMD ATI Radeon 840M / 860M Graphics

Memory: 23077MiB / 31371MiB

A few notes:

- I am running some closed software/firmware, but try to lean towards open. I know that’s as good as running completely closed to some but that is my config.

- Yes, I do have 32gb ram in the base model. On top of that, it’s not dual channel. I feel like this is probably enough to give the base platform a fair shake?

- You’ll notice I have the upgraded 2880x1920 120 hz panel.

- I’m just running everything off of a single 1 TB NVMe drive, Btrfs with compression enabled.

- Arch base, Plasma desk env. Framework NEEDS to look into project banana/KDE Linux, I think it is in alpha right now. They probably already do have their peepers on it I’m guessing.

Personal experience:

I think it’s important to note here that for me paying twenty two hundred bucks for a brand spanking new laptop is face-meltingly expensive. I understand supply chain enough and I’m actually surprised and happy with the value here, but I just don’t have great financial security, like a lot of people right now. I’m coming from a Lenovo T490s I rescued from a lethal soda spill, and the desktop I’m using is an Intel i7-6700 with an RX 480 and an RTX 3060 12gb, 32gb ram. I also use a Bosgame P3, a Surface 7, and a couple Chromebooks a lot. All of them are running either Arch, Alpine, Debian, Fedora, or Bazzite at any given time. I also love to tinker with the RPi ecosystem. I’m a technical artist that teaches digital media arts mostly, but lately most of my time is actually spent developing agentic AI frameworks for both art installations and disability aid. To that end, I am also very, very, VERY excited to say an artist I’m working with has decided to use the 128gb RAM Framework Desktop as the platform for one of her ML installs, and I expect to have my grubby lil’ mitts on one in… November, I think?

Linux experience:

I don’t know what first class is, but I cannot imagine anything being better than this. I have installed Linux on SO. MANY. CONFIGS. I have fought through so much bizarre behavior for so many hours, including messed up power tables causing seemingly undiagnosable and critical system instability, and obviously I’ve seen firmware problems. I’ve been configuring this install for 4 days or so. Lots of hours into it, more than makes sense. I am picky, and all my servers are dead and waiting for fixes I can’t afford, so I have no deployable configs (I’m a freak is the actual reason). Don’t be afraid to throw Fedora on this and go to town.

Even building things this spec probably shouldn’t be asked to build, it’s just been really wonderful. Plasma is a dream, and all of the firmware is just incredible: hardware controls like the keyboard backlight and function keys work out of the box like a proprietary solution. IT. RULES. BIOS rules, battery limits are configurable, and most things you’d ever want are made accessible in it. I think the experience for disabling secure boot could have been maybe better explained? A quick web search took me out of Disneyland for a second but I was right back after that.

Hardware VAAPI? Flawless. Video editing in Kdenlive? Totally doable on base spec. And running whisper for its ML functions? More on that later. Full audio editing with great latency in Ardour? Didn’t break a sweat even with multitrack, lots of DSP, and low latency. Hell, it didn’t even mind running easyeffects on the standard PipeWire instance over the output of the DAW, as well as ALSA. Simply stupid. I was using a set of DT 990 Pros with the included (not even a module, thank you fw) headphone jack, and immediately jumpscared myself. WHAT is WITH the amp in this thing?

Hardware:

I have SO much good gain from the headphone out on this setup, I am SHOCKED. It is so much more than possible to be in a creative workflow with this thing and leave ample headroom in your audio output. What I mean is: in a situation where you’re working multitrack before doing any work with dynamics, and have everything gain staged to give you lots of headroom, you can just rely on pumping the gain with the amp on the headphone output to accommodate. I’m talking worst-case mixing, with low output headphones that are known as hard to drive. All that, and I’m not passing 50% gain, when on most laptops I’m having to throw a damn brickwall limiter on my master to hear what I’m doing. Literally 10/10, and I wasn’t at all expecting it, which also extends to my thoughts on the speakers.

8/10? good!! great! I was… expecting them to be crap. I kinda still thought they were when I was halfway through configuration. “eh”, kinda vibe. But for those who don’t know, most systems with speakers this size NEED to be tuned. It’s just a matter of physics: once a speaker gets small past a certain point, it needs DSP, basically effects applied before the sound hits it, to compensate. If you are just sending raw signal to a speaker, it would have to be very expensive to sound good. Modern systems, including almost all Windows systems, employ various techniques to get computers to sound good. This includes Dolby processing, dynamics processing, filtering/exciting/EQing, and probably most notably convolution with impulse responses.

I’ll talk about that more later but for now: I have easyeffects running with a DIABOLICAL EQ. It should NOT exist and it IS a crime I should be punished for. But damn. It. Sounds. Awesome. Basically, if you try to stick to making changes using reductions instead of amping gain, and pay attention to where the bass cuts off and where there are unwanted resonances in the chassis (400-600 Hz?) while scooping some unwanted mud and then pushing underrepresented mids and throwing on some sparkle, you can get like 80% of the way there. Laptop speakers aren’t flat, so IMO go for pleasurable, embellished listening that isn’t fatiguing instead of chasing flat. Boy, these things just took, and took, and took, and I just kept pushing and pushing and… work needs done here. These things are actually fun little drivers and I think it’s really sad there’s a lot of Linux users out there who are probably just left in mud city and don’t even know.

I’m talking musicians with their MacBook Pros that say “Yeah but I just can’t let go of the speakers nothing sounds even close to as good as Apple” being seriously challenged level decent to my ears. It’s good enough for whatever, honestly. It has enough gain that even without running limiting afterwards and giving extra headroom for spikes of the wild EQ, it’s loud enough for general usage if not loud enough to do small workshops WITH the corrected audio. The Linux experience is so snappy even with the base model I would say slam easyeffects on over everything and start it on startup, it doesn’t even matter. I’ve tried Carla and wiring LSP stuff but it’s not as reliable and more difficult from what I can see. Anywho, if you wanted to run a less conservative chain where you maximized for volume or even excited or multiband compressed this sunnovagunn to get improved dynamic response and stuff? Like I wonder even if we could get some sort of like BBE style playback correction/cider music processing style or whatever listening exciters going, you know? AH! just gets me so amped, but I have to stop talking about it for now! More later!
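For anyone who wants to see what a "cut first, boost gently" EQ like the one described above does on paper, here is a minimal Python sketch. It uses the widely published RBJ Audio EQ Cookbook peaking filter, which is an assumption on my part and not necessarily what easyeffects runs internally. The band list is made up for illustration (not measured on this laptop), and the 48 kHz sample rate is also assumed.

```python
import cmath
import math

SAMPLE_RATE = 48_000  # assumed sample rate in Hz


def peaking_biquad(f0, gain_db, q, fs=SAMPLE_RATE):
    """Return normalized (b, a) coefficients for an RBJ-cookbook peaking band."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def band_gain_db(b, a, freq, fs=SAMPLE_RATE):
    """Magnitude response of one biquad at `freq`, in dB."""
    z = cmath.exp(-1j * 2 * math.pi * freq / fs)  # this is z^-1 on the unit circle
    h = (b[0] + b[1] * z + b[2] * z * z) / (a[0] + a[1] * z + a[2] * z * z)
    return 20 * math.log10(abs(h))


# Hypothetical bands as (center Hz, gain dB, Q). Illustrative only, not measured.
BANDS = [
    (500, -4.0, 2.0),   # tame a chassis resonance somewhere in 400-600 Hz
    (250, -3.0, 1.0),   # scoop some mud
    (2000, 2.0, 1.0),   # nudge underrepresented mids
    (9000, 2.0, 0.7),   # a little sparkle
]


def chain_gain_db(freq, bands=BANDS):
    """Total gain of all bands in series at `freq` (dB values simply add)."""
    return sum(band_gain_db(*peaking_biquad(f0, g, q), freq) for f0, g, q in bands)


if __name__ == "__main__":
    for freq in (100, 250, 500, 1000, 2000, 5000, 9000, 15000):
        print(f"{freq:>6} Hz  {chain_gain_db(freq):+6.2f} dB")
```

Printing the summed curve is a quick way to check how much boost a chain really adds anywhere before deciding whether a limiter is needed after it.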

On Machine Learning:

I know this IS the bottom spec, but… I mean it got AI in the name don’t it?

Alright, so I’m having interesting findings here. That’s probably what most expected, no clear answer on AI performance with a new product. First, let’s mention that the NPU this device has on board is effectively not supported by Linux past reading that it IS indeed there, which I think is AMD’s fault? Dunno. That being said, buying hardware that is essentially a brick in the middle of your chip if you’re a Linux user… ouch. I’d really, really, really like to be using those 50 TOPS. On top of that, almost all AI is, for those who don’t know, centered around Nvidia GPUs and their proprietary CUDA library to run all of the calculations AI relies on. (boo) ROCm is AMD’s equivalent library, and from what I gather rather quickly it is not meant for RDNA/consumer iGPU hardware architecture, and that’s what we are running I think? Work is moving fast I gather but that doesn’t mean much right now.

So that being said, all of these thoughts come with the caveat that ML is computed using either brute force CPU or the Vulkan backend (yes, the video rendering pipeline) to utilize the GPU. Another thing worth noting: with 32gb the BIOS lets me hard allocate up to HALF of it (hell yeah) to the VRAM. It’d be nice to have more granularity over the provision, but whatever. This is important because if an app is trying to calculate the pool it has for ram and the standard allocation is 512mb, the unified memory promise will…. mostly… kind of work. Kind of. In my early testing it really just seemed like relying on unified memory regularly resulted in things being much less stable and much less allocatable than with 16gb hard allocated. I think I’d seriously consider throwing another 32 gig stick in the base model to allocate it completely to the GPU as absolutely ape s*** as that sounds, depending on how things shake out.
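If you want to see what the kernel thinks the GPU has to work with after changing that BIOS setting, here is a small Python sketch. It assumes the amdgpu driver is loaded and exposes its `mem_info_vram_*` and `mem_info_gtt_*` counters (in bytes) under `/sys/class/drm/cardN/device/`. The function name and the MiB rounding are my own choices, and I have not verified the output on this exact machine.

```python
from pathlib import Path

COUNTERS = (
    "mem_info_vram_total",  # dedicated (carved-out) VRAM, in bytes
    "mem_info_vram_used",
    "mem_info_gtt_total",   # system RAM the GPU may map on demand, in bytes
    "mem_info_gtt_used",
)


def read_amdgpu_memory(drm_root="/sys/class/drm"):
    """Return {card: {counter: MiB}} for every card exposing amdgpu counters."""
    report = {}
    for card in sorted(Path(drm_root).glob("card[0-9]*")):
        if "-" in card.name:  # skip connector entries such as card0-eDP-1
            continue
        values = {}
        for name in COUNTERS:
            counter = card / "device" / name
            if counter.is_file():
                values[name] = int(counter.read_text().strip()) // (1024 * 1024)
        if values:
            report[card.name] = values
    return report


if __name__ == "__main__":
    for card, values in read_amdgpu_memory().items():
        for name, mib in values.items():
            print(f"{card} {name}: {mib} MiB")
```

Comparing the VRAM total against the GTT total before and after a BIOS change should show roughly how the 32gb is being split between the fixed carve-out and the shared pool.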

ALL of that being said? WOOF. This thing… is fast. You can pretty trivially push huge models like LLMs, notably at the moment OpenAI’s gpt-oss 20b and Qwen3 30B-A3B are both happy as clams initializing 8k context windows and generating at VERY usable speeds, while leaving the rest of the computer actually usable during generation. WTF. W.T.F. That is so close to being SO useful for local code generation and beyond that I’m going to have to do way, way more testing, and I didn’t expect it to be even near viable. Running whisper, or small local models, like Jan? You’re laughing. Image generation is a little bit less fun, normally having to just default to CPU and take MASSIVE performance penalties as we just aren’t as far along as we are with optimizing LLMs, but I will have to do more research on ROCm or Vulkan backended ComfyUI builds or whatever, and plus you totally can use KoboldCpp or by extension something like Gerbil to generate images fairly easily on this platform.

Almost done…

Things I did not check out enough:

Gaming performance.

This highres screen pushing 120 frames felt like a stupid idea with the bottom spec. I’ve bought i3 Surfaces before (I know, silly) and so I was worried. However, it does push them during desktop use, and to my delight, light games DO run full resolution at this speed. Things like Slay the Spire, Puyo Puyo Tetris 2, Cultist Simulator, Balatro, StepMania, I hand tested with MangoHud and yes, they are ALL hitting 120hz with max resolution on the base spec of this machine. Games like Hades II run at reduced resolution (I forgor which one, it’s the step below native res that keeps the same aspect) at 120hz super solid on medium settings. I was NOT expecting that, and so I am going to be doing more testing on emulation and poking around at the limits of what is possible here and considering FSR implementation or something to see if I can’t squeeze a bit more. I suppose y’all on Bazzite are already laughing it up, but I gotzta werk on this thing! This is my daily! I actually do run Bazzite in desktop mode a lot on my HTPC but DO NOT TEMPT ME!
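On the "step below native that keeps the same aspect" bit: the arithmetic is easy to sketch in Python. The scale factors below are hypothetical picks for illustration, since the post doesn’t say which mode was actually used, and whether a given game or compositor offers these exact modes is not something this checks.

```python
from fractions import Fraction

NATIVE = (2880, 1920)  # panel resolution from the specs at the top of the post


def scaled_mode(native, scale):
    """Scale both axes by the same exact fraction so the aspect ratio is kept."""
    width = native[0] * scale
    height = native[1] * scale
    if width.denominator != 1 or height.denominator != 1:
        raise ValueError(f"scale {scale} does not give whole pixels")
    return int(width), int(height)


def pixel_share(scale):
    """Fraction of native pixels the GPU has to render at this scale."""
    return float(scale * scale)


if __name__ == "__main__":
    # Hypothetical candidate scales, not a list of modes the panel reports.
    for scale in (Fraction(3, 4), Fraction(2, 3), Fraction(1, 2)):
        w, h = scaled_mode(NATIVE, scale)
        print(f"{w}x{h}  renders {pixel_share(scale):.0%} of native pixels")
```

The useful takeaway is that the pixel count falls with the square of the scale, which is why even one step down buys a lot of headroom on an iGPU.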

The screen.

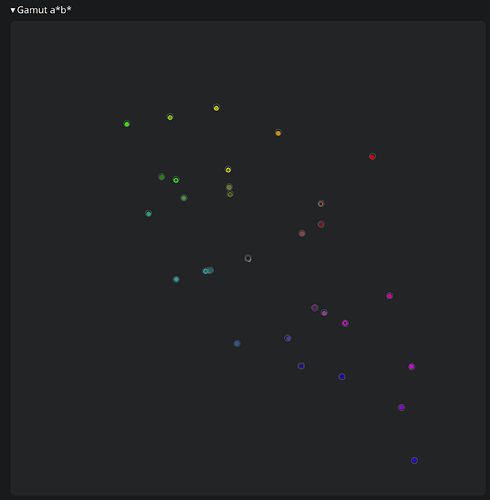

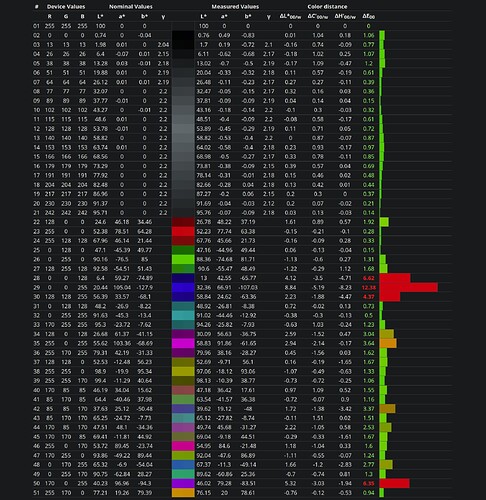

Yes, it’s pretty punchy and pretty bright. Yes, it is… so… so much better than a 2019 ThinkPad. Yes… the bottom corners are… smaller than the top rounded corners? Second WTF of the post. But… the fact that I’m even talking about it is bonkers, because the price of upgrading it was so accessible that someone like me actually gets to upgrade! 180 bux? dude! That is so FRIGGING AWESOME. I don’t want to comment on color or motion clarity yet, or its application as a creative monitor, because I have a colorimeter (Spyder5) that I will be using to check things out soon. That being said… 120hz go brr and it is pretty sharp. Definitely enough for punching text out, that is for DAMN sure. It’s fun but I don’t think it’s technically the best and I’m glad it isn’t, for accessibility. But yes, I’ll be back with numbos on my specific panel, maybe probably. Also if I have these goofy rounded corners PLEASE gimmie a touchscreen. Linux touchscreen support is good enough, and a system this snappy would be so amazing in Plasma with its multitouch virtual desktop management stuff. Y’alls trackpad works well for that already, too, so thanks for that. That being said, a touchscreen really helps make the most of a single screen and makes a laptop a productivity monster, I believe it seriously makes a huge difference.

If you actually read all of this: gold star.

I have just a few questions and main points that stick out to me. I’ll be looking into all of these myself more when I have time, likely. But if you’ve made it this far, you may actually know:

- The biggest sticking point with LLMs at the base model? Forward pass text encoding.

I’ll need to poke around more, but I’m getting awesome speeds without context, and then actually encoding large prompts (6k, 8k, 10k, 16k, 21k, 32k) with all sizes of models while using 512mb unified vram is… unusable, sadly. VERY sadly. I don’t know what’s up. I’ve tried all different kinds of offload on GPU and CPU and splitting, I’ve put probably 5 hours in or whatever, but I haven’t gotten anything really… usefully working with a larger context. Which… hurts. User error? Inherent limitation of such low spec hardware? May need to just limit to workflows without large ingest steps, which is still SOME uses. Cool still, but so close to MORE.
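One thing that might be worth ruling out here (a guess, not a diagnosis) is how big the KV cache gets at those context sizes compared to a 512mb carve-out. A rough Python sketch of the usual back-of-envelope formula follows. The layer count, KV head count, and head size are hypothetical placeholders, not the real numbers for any model named in this post, and 2 bytes per value assumes an unquantized f16 cache.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, n_tokens, bytes_per_value=2):
    """Keys plus values stored for every layer, KV head, and token."""
    return 2 * n_layers * n_kv_heads * head_dim * n_tokens * bytes_per_value


# Hypothetical model shape, for illustration only.
N_LAYERS = 48
N_KV_HEADS = 4
HEAD_DIM = 128

# Prompt sizes mentioned in the post, taking "k" as 1024 tokens.
CONTEXTS = [6, 8, 10, 16, 21, 32]

if __name__ == "__main__":
    for k in CONTEXTS:
        tokens = k * 1024
        mib = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, tokens) / 2**20
        print(f"{k:>2}k tokens  {mib:8.0f} MiB of KV cache")
```

With these made-up dimensions an 8k context already needs 768 MiB for the cache alone, before weights or compute buffers, so it would not fit in 512mb of dedicated VRAM. Whether that spill is what actually tanks prompt encoding on this machine is exactly the open question.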

- The audio situation. What is Framework doing for DSP on their Windows installations? Do they have lab recorded (or honestly just buy a mic and do a sweep in the office recorded lol) impulse responses for the frequency response of the speaker/hardware config combos? Does anyone? Has anyone run one in a convolution plugin in Linux easyeffects? Do we have official frequency response graphs? Does it make sense for them to release some of this stuff, or a guide with official recommendations for Linux speaker tuning? Advanced optional setup guides, that are potentially more vague but give great information and jump off points? I don’t have a measurement mic or you already know I’d be all over it, and I don’t have the hundred bucks to throw at it as much as I wanna. I think this is a pretty important step, actually.
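For the "do a sweep in the office" idea, generating the test signal is the cheap part. Below is a minimal Python sketch of an exponential (log) sine sweep written to a WAV file with only the standard library. The 20 Hz to 20 kHz range, 10 second length, 48 kHz rate, and half-scale level are all assumptions, and actually turning a recording of it into an impulse response (deconvolution against the sweep) is not covered here.

```python
import math
import struct
import wave


def log_sweep(f_start=20.0, f_end=20_000.0, seconds=10.0, fs=48_000, level=0.5):
    """Exponential sine sweep as floats in [-level, level]."""
    n = int(seconds * fs)
    rate = math.log(f_end / f_start)
    scale = 2 * math.pi * f_start * seconds / rate
    return [
        level * math.sin(scale * (math.exp((i / fs) / seconds * rate) - 1))
        for i in range(n)
    ]


def write_wav(path, samples, fs=48_000):
    """Write the samples as mono 16-bit PCM."""
    frames = b"".join(struct.pack("<h", int(s * 32767)) for s in samples)
    with wave.open(path, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(fs)
        out.writeframes(frames)
```

Something like `write_wav("sweep.wav", log_sweep())` gives a file you could play through the speakers while recording with a measurement mic. Keep the level modest, since a full-scale sweep is not kind to tiny drivers.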

Maybe there is some sort of community effort, or maybe even an official package that Framework can start to provide. Could be something as simple as easyeffects presets, or an ultra lightweight DSP chain using open source plugins, generated thoughtfully using measurements of each hardware iteration, that assign themselves based on the hardware you’ve got? Just have a fairly conservative but still night and day tuning profile for each general revision? I mean it’d just make the laptops sound SO much better, and if there was like… an appimage or flatpak? maybe even something we could host on package managers? even just a .sh sorta thing that guides the user and sets up based on distro and desktop env in cli, I dunno. It’s just so night and day and I’d love to see it more accessible, somehow.
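The "assign themselves based on the hardware you’ve got" part could be as small as this Python sketch, which reads the DMI product name Linux exposes at `/sys/class/dmi/id/product_name` and maps it to a preset file. The preset filenames and the mapping are hypothetical (no such official presets exist that I know of), and I’m assuming the product name matches the Host line in my specs above.

```python
from pathlib import Path

# Hypothetical mapping from a DMI product-name substring to a preset file.
PRESETS = {
    "Laptop 13 (AMD Ryzen AI 300 Series)": "fw13-amd-ai300.json",
}
DEFAULT_PRESET = "generic-laptop.json"  # hypothetical conservative fallback


def read_product_name(dmi_dir="/sys/class/dmi/id"):
    """Return the DMI product name, or an empty string if it is unavailable."""
    path = Path(dmi_dir) / "product_name"
    return path.read_text().strip() if path.is_file() else ""


def pick_preset(product_name, presets=PRESETS, default=DEFAULT_PRESET):
    """Pick the first preset whose key appears in the product name."""
    for needle, preset in presets.items():
        if needle in product_name:
            return preset
    return default


if __name__ == "__main__":
    name = read_product_name()
    print(f"{name or 'unknown hardware'} -> {pick_preset(name)}")
```

Falling back to a conservative default instead of failing keeps it safe on hardware nobody has measured yet.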

Anywho if nothing but an online repository for my thoughts and tests in this laptop… have this long ass post